When conducting scientific research, whether in biology, medicine, psychology, or any other field, statistical testing plays a vital role in validating results. However, because a hypothesis test draws conclusions from a limited sample, its decision can be wrong. The two ways it can go wrong are called Type I error and Type II error.

Understanding these two types of errors is crucial for students, researchers, and professionals because they influence the accuracy and credibility of research findings. This article explains what Type I and Type II errors are, their causes, probabilities, differences, and real-life examples in simple, clear language.

1. Introduction to Hypothesis Testing

Before we dive into Type I and Type II errors, let us understand the basics of hypothesis testing:

- Null Hypothesis (H0):

The null hypothesis assumes there is no relationship or effect between the variables being studied.

Example: “A new drug is no more effective at reducing blood pressure than the standard drug.”

- Alternative Hypothesis (H1 or Ha):

The alternative hypothesis states that a relationship or effect exists between the variables.

Example: “A new drug reduces blood pressure more effectively than the standard drug.”

- Decision in Hypothesis Testing:

- We either reject the null hypothesis (H0) or fail to reject it, based on the evidence from the data.

- This decision depends on the significance level, the sample size, and the power of the test.

Because the decision is based on a sample rather than the whole population, it can sometimes be wrong. This is where Type I and Type II errors come in.
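To make the decision rule concrete, here is a minimal Python sketch of a two-sided one-sample z-test. It assumes the population standard deviation is known (real studies more often use a t-test), and the function name `z_test_decision` and the blood-pressure numbers are hypothetical, chosen only for illustration.

```python
from statistics import NormalDist


def z_test_decision(sample_mean: float, null_mean: float, sigma: float,
                    n: int, alpha: float = 0.05) -> str:
    """Two-sided one-sample z-test; sigma (population SD) is assumed known."""
    # Standardize the observed difference from the value stated in H0.
    z = (sample_mean - null_mean) / (sigma / n ** 0.5)
    # p-value: chance of a |z| at least this large if H0 were true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Decision rule: reject H0 only when the p-value is below alpha.
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Hypothetical data: extra drop in blood pressure (mmHg) versus the standard drug,
# where H0 says the true extra drop is 0.
print(z_test_decision(sample_mean=4.0, null_mean=0.0, sigma=10.0, n=50))  # reject H0
print(z_test_decision(sample_mean=2.0, null_mean=0.0, sigma=10.0, n=50))  # fail to reject H0
```

Note that "fail to reject H0" does not prove H0 is true; either decision can still be an error.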

2. What is Type I Error? (False Positive)

- Definition:

A Type I error occurs when we reject the null hypothesis (H0) even though it is true.

- In simple words: we conclude that an effect exists when, in reality, it does not.

- Symbol:

Its probability is denoted by α (alpha), which is also called the significance level of the test.

- Meaning in research:

It is a false positive error: we wrongly conclude that our treatment, experiment, or factor has an effect.

- Example in biology:

- Suppose a researcher tests a new plant fertilizer.

- The experiment shows improved plant growth, so the researcher concludes the fertilizer is effective.

- But in reality, the growth was due to seasonal variation, not the fertilizer.

- Here, rejecting H0 was a mistake → Type I error.

3. Causes of Type I Error

Several factors increase the chances of committing a Type I error:

- Random chance or luck – sometimes results look significant by accident.

- High significance level (α) – if α = 0.10, you accept a 10% risk of rejecting H0 when it is actually true.

- Poor experimental design – lack of proper controls or randomization.

- Multiple testing – running many tests, each at the same α, raises the chance that at least one of them produces a false positive.

- External factors – variables other than the studied ones may influence results.
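The effect of multiple testing can be quantified. Assuming m independent tests, each run at level α on a true null hypothesis, the chance of at least one false positive is 1 − (1 − α)^m. The sketch below computes this, together with the Bonferroni correction (testing each hypothesis at α/m), which is one standard way to keep that overall rate at or below α. The function names are illustrative.

```python
def familywise_error_rate(alpha: float, m: int) -> float:
    """Chance of at least one false positive among m independent tests whose H0s are all true."""
    return 1 - (1 - alpha) ** m


def bonferroni_alpha(alpha: float, m: int) -> float:
    """Per-test significance level under the Bonferroni correction."""
    return alpha / m


for m in (1, 5, 20):
    print(m, round(familywise_error_rate(0.05, m), 3))  # 0.05, 0.226, 0.642

# Testing each of 20 hypotheses at 0.05 / 20 = 0.0025 keeps the overall rate just under 0.05.
print(round(familywise_error_rate(bonferroni_alpha(0.05, 20), 20), 4))
```

With 20 uncorrected tests at α = 0.05, the chance of at least one false positive is roughly 64%, far above the nominal 5%.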

4. Probability of Type I Error

- The probability of Type I error is α (alpha), which is usually fixed before the test.

- Common values: α = 0.05 (5%) or α = 0.01 (1%).

- Example: If α = 0.05, there is a 5% chance of wrongly rejecting H0 when it is actually true.

- Trade-off: for a fixed sample size, reducing the Type I error rate increases the chance of a Type II error (false negative). Researchers must balance the two.
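The claim that α equals the probability of a Type I error can be checked by simulation. This sketch assumes normally distributed data with a known standard deviation of 1 and a two-sided z-test; it repeatedly samples from a population where H0 (mean = 0) is true and counts how often the test rejects it. The function name and settings are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def simulate_type_i_rate(alpha: float = 0.05, n: int = 30,
                         trials: int = 20_000, seed: int = 1) -> float:
    """Estimate how often a two-sided z-test rejects H0 (mean = 0) when H0 is true."""
    rng = random.Random(seed)                     # fixed seed for reproducibility
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    rejections = 0
    for _ in range(trials):
        # Draw from N(0, 1), so H0 (population mean = 0) really is true.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) / (1.0 / n ** 0.5)      # sigma = 1 assumed known
        if abs(z) > z_crit:
            rejections += 1                       # every rejection here is a Type I error
    return rejections / trials


print(simulate_type_i_rate())            # close to 0.05
print(simulate_type_i_rate(alpha=0.01))  # close to 0.01
```

The observed rejection rate tracks whatever α is chosen, which is why α is described as the Type I error probability.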

5. Real-Life Examples of Type I Error

- Medical field: A test shows a patient has cancer when they actually do not.

- Biology research: Concluding a new pesticide works, when plant survival was actually due to weather.

- Sports science: Believing that new running shoes improve performance when the better results were actually due to chance.

- Psychology: Thinking a therapy works for anxiety when the improvement was just a placebo effect.

These false positives can lead to wasted resources, unnecessary treatments, or misleading conclusions.

6. What is Type II Error? (False Negative)

- Definition:

A Type II error occurs when we fail to reject the null hypothesis (H0) even though it is false.

- In simple words: we miss a real effect that actually exists.

- Symbol:

Its probability is denoted by β (beta).

- Meaning in research:

It is a false negative error: we wrongly conclude that there is no effect when one truly exists.

- Example in biology:

- A researcher studies whether a new antibiotic works better than the standard one.

- The test concludes there is no difference.

- But in reality, the new antibiotic is more effective.

- Here, failing to reject H0 was a mistake → Type II error.
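With both errors defined, the four possible outcomes of a test can be written as a small lookup. This is only an illustrative sketch: in practice the truth about H0 is unknown, which is exactly why these errors cannot be detected directly. The function name `classify_decision` is hypothetical.

```python
def classify_decision(h0_is_true: bool, rejected_h0: bool) -> str:
    """Name the outcome of a test, given the (normally unknown) truth about H0."""
    if h0_is_true:
        return "Type I error (false positive)" if rejected_h0 else "correct: no effect, none claimed"
    return "correct: real effect detected" if rejected_h0 else "Type II error (false negative)"


# Fertilizer example: no real effect (H0 true), but H0 was rejected.
print(classify_decision(h0_is_true=True, rejected_h0=True))
# Antibiotic example: a real effect (H0 false), but H0 was not rejected.
print(classify_decision(h0_is_true=False, rejected_h0=False))
```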

7. Causes of Type II Error

- Small sample size – too few observations to detect real differences.

- Low statistical power – weak tests fail to detect effects.

- Measurement errors – poor instruments or data collection methods.

- High variability in data – noise hides real patterns.

- Very strict significance level (low α) – making tests too conservative increases false negatives.

8. Probability of Type II Error

- The probability of Type II error is β (beta).

- The power of a test = 1 – β, meaning the ability to correctly reject H0 when it is false.

- A test with high power (e.g., 0.8 or 80%) has a low chance of Type II error: power = 0.8 means β = 0.2, a 20% chance of missing a real effect of the assumed size.

- Increasing the sample size or using a better experimental design can reduce β.
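The link between sample size and β can be computed directly for a simple case. This sketch assumes a one-sided z-test of H0: μ = μ0 against H1: μ > μ0 with a known standard deviation σ. Under those assumptions, power = 1 − Φ(z − δ√n / σ), where z is the (1 − α) quantile of the standard normal distribution, Φ is its CDF, and δ is the true difference μ − μ0. The effect size of 0.3 standard deviations is a hypothetical value.

```python
from statistics import NormalDist


def power_one_sided_z(effect: float, sigma: float, n: int, alpha: float = 0.05) -> float:
    """Power (1 - beta) of a one-sided z-test of H0: mu = mu0 vs H1: mu > mu0.

    `effect` is the true difference mu - mu0; sigma is assumed known.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)  # rejection threshold under H0
    shift = effect * n ** 0.5 / sigma       # how far the true effect moves the z statistic
    return 1 - std_normal.cdf(z_crit - shift)


for n in (10, 25, 50, 100):
    power = power_one_sided_z(effect=0.3, sigma=1.0, n=n)
    print(f"n={n:>3}  power={power:.2f}  beta={1 - power:.2f}")
```

For this hypothetical effect, power rises from roughly 0.24 at n = 10 to about 0.91 at n = 100, so β falls from about 0.76 to 0.09. When the effect is zero, the "power" equals α, which is simply the Type I error rate.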

9. Real-Life Examples of Type II Error

- Medical diagnosis: A test fails to detect COVID-19 in an infected person.

- Ecology: A biologist concludes a pollutant has no effect on fish survival, when it actually does.

- Agriculture: Believing a crop variety is not drought-resistant when it actually is.

- Wildlife studies: Missing the presence of a rare species in a survey due to low sample size.

These false negatives can delay discoveries, harm health, or misguide conservation efforts.

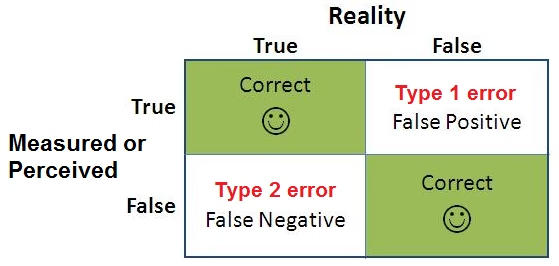

10. Differences between Type I and Type II Errors

| Basis | Type I Error (α) | Type II Error (β) |
|---|---|---|
| Definition | Rejecting H0 when it is true | Failing to reject H0 when it is false |
| Also called | False Positive | False Negative |
| Symbol | α (alpha) | β (beta) |
| Cause | Random chance, high α | Small sample size, low test power |
| Probability | Equal to α (significance level) | Equal to 1 – test power |
| Reduced by | Lowering α (stricter test) | Increasing sample size, raising power |
| Impact | Wrongly claiming an effect exists | Missing a true effect |
| Research risk | Wastes resources, false discovery | Missed opportunities, false security |

11. Trade-off Between Type I and Type II Errors

- For a fixed sample size and study design, reducing one error usually increases the other.

- Lowering α (stricter test): decreases false positives but increases false negatives.

- Raising α (lenient test): decreases false negatives but increases false positives.

- Researchers must choose an acceptable balance depending on the consequences.

Example:

- In medical research, avoiding Type II error is often more important because missing a real treatment can cost lives.

- In basic lab experiments, avoiding Type I error may be preferred to prevent false discoveries.
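The trade-off can be seen numerically. This sketch again assumes a one-sided z-test with known σ, and the effect size and sample sizes are hypothetical. For a fixed n, lowering α raises β; a larger sample can bring β back down even at the stricter α.

```python
from statistics import NormalDist


def beta_one_sided_z(effect: float, sigma: float, n: int, alpha: float) -> float:
    """Type II error probability of a one-sided z-test (H1: mu > mu0), sigma known."""
    z_crit = NormalDist().inv_cdf(1 - alpha)  # stricter alpha -> higher threshold
    shift = effect * n ** 0.5 / sigma         # expected z statistic when H1 is true
    return NormalDist().cdf(z_crit - shift)   # chance the z statistic stays below the threshold


# Fixed sample size: making the test stricter increases beta.
for alpha in (0.10, 0.05, 0.01):
    print(f"n=50   alpha={alpha:.2f}  beta={beta_one_sided_z(0.3, 1.0, 50, alpha):.2f}")

# A larger sample lowers beta even at the strict alpha = 0.01.
print(f"n=150  alpha=0.01  beta={beta_one_sided_z(0.3, 1.0, 150, 0.01):.2f}")
```

Here β rises from about 0.20 to 0.32 to 0.58 as α goes from 0.10 to 0.01, and falls to about 0.09 when the sample is tripled to 150 at α = 0.01.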

12. Importance of Understanding Type I and II Errors in Biology Research

- Ensures reliable results in experiments.

- Helps in designing better studies with correct sample sizes.

- Prevents false claims in scientific publications.

- Guides ethical decision-making in medical and ecological research.

- Balances risks between false discoveries (Type I) and missed effects (Type II).

13. How to Minimize Errors in Research

- Use larger sample sizes.

- Choose an appropriate significance level (α).

- Improve study design and randomization.

- Increase statistical power by choosing the most appropriate (powerful) test for the data.

- Avoid unnecessary multiple testing, or correct for it when many tests are needed.

- Ensure accurate data collection and reduce measurement error.
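The sample-size advice above can be made quantitative. For a one-sided z-test with known σ (a simplifying assumption; other tests use different formulas), the smallest sample giving power 1 − β at level α is n = ((z(1 − α) + z(1 − β)) · σ / δ)², rounded up, where z(p) is the p-quantile of the standard normal distribution and δ is the smallest effect worth detecting. The function name and the effect size of 0.3 are hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_size(effect: float, sigma: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Smallest n giving at least `power` for a one-sided z-test (sigma known)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha)  # controls Type I error
    z_beta = std_normal.inv_cdf(power)       # controls Type II error (power = 1 - beta)
    n = ((z_alpha + z_beta) * sigma / effect) ** 2
    return math.ceil(n)                      # round up: a fraction of a subject cannot be tested


print(required_sample_size(effect=0.3, sigma=1.0))              # 69
print(required_sample_size(effect=0.3, sigma=1.0, alpha=0.01))  # 112: stricter alpha needs more data
```

Planning the sample size this way, before collecting data, lets a study fix both α and β in advance instead of accepting whatever β a convenient sample happens to give.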

14. Conclusion

Both Type I and Type II errors are common in research methodology, but their consequences can be very different.

- Type I error (α) = false positive → wrongly detecting an effect.

- Type II error (β) = false negative → failing to detect a real effect.

A good researcher understands how to balance these two errors by carefully selecting significance levels, increasing sample sizes, and designing strong studies. This not only makes research reliable but also ensures that scientific findings truly contribute to knowledge and society.

Frequently Asked Questions (FAQs) on Type I and Type II Errors

1. What is the difference between Type I and Type II errors?

- Type I error (α): Rejecting the null hypothesis when it is actually true (false positive).

- Type II error (β): Failing to reject the null hypothesis when it is false (false negative).

2. Why are Type I and Type II errors important in research?

They determine the accuracy and reliability of scientific results. Misinterpreting data can lead to false discoveries (Type I) or missed opportunities (Type II), both of which can impact science, medicine, and decision-making.

3. How can researchers reduce Type I errors?

- Use a smaller significance level (α) such as 0.01 instead of 0.05.

- Improve experimental design and control groups.

- Avoid multiple hypothesis testing without correction.

4. How can researchers reduce Type II errors?

- Increase the sample size.

- Use powerful statistical tests.

- Reduce variability in measurements.

- Choose an appropriate α (not too strict).

5. Which error is more serious – Type I or Type II?

It depends on the situation:

- In medical research, Type II error is often worse because missing a real treatment can risk lives.

- In basic lab experiments, Type I error may be more harmful because it leads to false claims and wasted resources.

6. What does α (alpha) mean in hypothesis testing?

- α represents the probability of making a Type I error.

- Common values are 0.05 (5%) or 0.01 (1%), meaning researchers accept a small risk of rejecting a true null hypothesis.

7. What does β (beta) mean in hypothesis testing?

- β represents the probability of making a Type II error.

- A smaller β means lower risk of missing a real effect.

8. What is the power of a test?

- Power = 1 – β.

- It is the probability of correctly rejecting the null hypothesis when it is false.

- High power (e.g., 0.8 or 80%) means the study has a good chance (here 80%) of detecting a real effect of the size it was designed to find.

9. Can we eliminate both Type I and Type II errors completely?

No. There is always a trade-off:

- For a fixed sample size, reducing Type I error increases the chance of Type II error, and vice versa.

- Increasing the sample size can lower both, but never to zero.

- Researchers must balance the two depending on the study’s purpose and risks.

10. Can you give a simple real-life example of both errors?

- Type I error: A medical test says a healthy person has a disease (false alarm).

- Type II error: A medical test fails to detect a disease in a sick person (missed diagnosis).

